Or at least that’s what I saw for MorphAdorner (97.1%) and LingPipe (97.0%) I wasn’t able to retrain the Stanford tagger on my reference data, so I can’t do anything other than accept Stanford’s reported cross-validation numbers on non-literary data, which are 96.9% (for the usably speedy left3words model) and 97.1% (for the very slow bidirectional model). My own cross-validation tests tend to confirm what the projects themselves claim, namely that they’re about 97% accurate on average. What I do have is cross-validation results, and the deeply inconclusive bag-of-tags trials I’ve described previously.

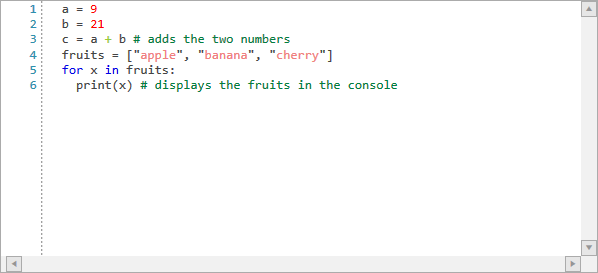

#BEST POS TAGGER PYTHON CODE#

decision trees, for example, nor the quality of the code in each package. The underlying algorithms that each tagger implements make a difference, but I’m really not qualified to evaluate the relative merits of hidden Markov models vs. This is probably the most important issue, but it’s also the most difficult for me to assess. Here’s a summary of the considerations that influenced my decision: Accuracy The good news overall, though, is that several of the other taggers would also be adequate for my needs, if necessary. In short: I’m going to use MorphAdorner for my future work. There’s a lesson here, surely, about research work in general, my coding skills in particular, and the (de)merits of being a postdoc.

This for a project that I thought would take a week or two. OK, I’m as done as I care to be with the evaluation stage of this tagging business, which has taken the better part of three months of intermittent work.
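For readers who want to see what a cross-validation accuracy figure like the ones quoted in this post actually measures, here is a minimal Python sketch. It is not the procedure used by MorphAdorner, LingPipe, or the Stanford tagger: the tagger is a stand-in most-frequent-tag baseline, and `TOY_CORPUS`, `train_baseline`, `tag`, and `cross_validate` are hypothetical names invented for illustration. The idea is simply to hold out one slice of hand-tagged sentences, train on the rest, score token-level accuracy on the held-out slice, and repeat for each slice.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: each sentence is a list of (word, tag) pairs.
TOY_CORPUS = [
    [("the", "DT"), ("dog", "NN"), ("barks", "VBZ")],
    [("the", "DT"), ("cat", "NN"), ("sleeps", "VBZ")],
    [("a", "DT"), ("dog", "NN"), ("sleeps", "VBZ")],
    [("a", "DT"), ("cat", "NN"), ("barks", "VBZ")],
]


def train_baseline(sentences):
    """Learn each word's most frequent tag, plus a global fallback tag."""
    per_word = defaultdict(Counter)
    overall = Counter()
    for sentence in sentences:
        for word, tag_ in sentence:
            per_word[word.lower()][tag_] += 1
            overall[tag_] += 1
    fallback = overall.most_common(1)[0][0]
    lexicon = {w: c.most_common(1)[0][0] for w, c in per_word.items()}
    return lexicon, fallback


def tag(model, words):
    """Tag known words from the lexicon; unknown words get the fallback."""
    lexicon, fallback = model
    return [lexicon.get(w.lower(), fallback) for w in words]


def cross_validate(sentences, k=5):
    """Return token-level accuracy for each of k interleaved folds."""
    if k < 2 or k > len(sentences):
        raise ValueError("k must be between 2 and the number of sentences")
    scores = []
    for i in range(k):
        held_out = sentences[i::k]  # every k-th sentence, offset i
        training = [s for j, s in enumerate(sentences) if j % k != i]
        model = train_baseline(training)
        correct = total = 0
        for sentence in held_out:
            words = [w for w, _ in sentence]
            gold = [t for _, t in sentence]
            predicted = tag(model, words)
            correct += sum(p == g for p, g in zip(predicted, gold))
            total += len(gold)
        scores.append(correct / total)
    return scores


if __name__ == "__main__":
    fold_scores = cross_validate(TOY_CORPUS, k=2)
    print("per-fold accuracy:", fold_scores)
    print("mean accuracy:", sum(fold_scores) / len(fold_scores))
```

A real evaluation would swap the baseline for the tagger under test and use a much larger reference corpus. The averaged per-fold score is the kind of number reported as "about 97%" above.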
